Updated at 12:44 p.m. ET on November 23, 2021

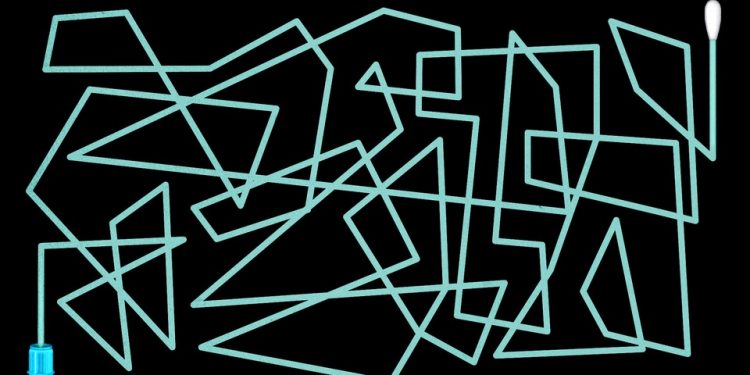

In a world with perfect coronavirus tests, people could swab their nose or spit in a tube and get near-instant answers about their SARS-CoV-2 status. The products would be free, fast, and completely reliable. Positives would immediately shuttle people out of public spaces and, if needed, into treatment; negatives could green-light entry into every store, school, and office, and spring people out of isolation with no second thought. Tests would guarantee whether someone is contagious, or merely infected, or neither. And that status would hold true until each person had the chance to test again.

Unfortunately, that is not the reality we live in—nor will it ever be. “No such test exists,” K. C. Coffey, an infectious-disease physician and diagnostics expert at the University of Maryland, told me. Not for this virus, and “not for any disease that I know of.” And almost two years into this pandemic, imperfection isn’t the only testing problem we have. For many Americans, testing remains inaccessible, unaffordable, and still—still!—ridiculously confusing.

Contradictory results, for instance, are an all-too-common conundrum. Cole Shacochis Edwards, a nurse in Maryland, discovered at the end of August that her daughter, Alden, had been exposed to the virus while masked at volleyball practice. Shacochis Edwards rapid-tested her family of four at home, while the high school ran a laboratory PCR on Alden. One week, 11 rapid antigen tests, 3 PCRs, and $125 later, their household was knee-deep in a baffling array of clashing results: Alden tested negative, then positive, then negative again, then positive again, then negative again; her father tested negative, then positive, then positive, then negative; Shacochis Edwards, who tested three times, and her son, who tested twice, stayed negative throughout. “None of it was clear,” she told me. Months after their testing saga, Shacochis Edwards is pretty sure the positives were wrong—but there’s simply no way to know for sure.

Some conflicting results are just annoying. Others, though, can be a big problem when people misguidedly act on them—unknowingly sparking outbreaks, derailing treatment, and squandering time and resources. And the confusion doesn’t stop there. The tests come in an absurd number of flavors and packages, with subtle differences between brands. They’re deployed in a disorienting variety of settings: doctors’ offices, community testing sites, apartment living rooms, and more. They’re being asked to serve several very different purposes, including diagnosis of sick patients and screening of people who feel totally symptom-free.

Our tests are imperfect—that’s not going to change. The trick, then, is learning to deal with their limitations; to rely on them, but also not ask too much.

Tests can tell us only whether they found bits of the virus, at a single point in time.

Tests are virus hunters. The best ones are able to accomplish two things: accurately pinpointing the pathogen in a person who’s definitely infected—a metric called sensitivity—and ruling out its existence in someone who’s definitely not, or specificity. Tests with great sensitivity will almost never mistake an infected person for a virus-free one—a false negative. High specificity, meanwhile, means reliably skirting false positives.
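
To make the two metrics concrete, here is a minimal sketch of how they are usually computed from a validation study. The function names and the counts are hypothetical, chosen only for illustration; they do not describe any real product.

```python
def sensitivity(true_pos: int, false_neg: int) -> float:
    """Share of truly infected people the test correctly flags as positive."""
    return true_pos / (true_pos + false_neg)


def specificity(true_neg: int, false_pos: int) -> float:
    """Share of truly uninfected people the test correctly reports as negative."""
    return true_neg / (true_neg + false_pos)


# Hypothetical study: 100 infected people and 1,000 uninfected people.
sens = sensitivity(true_pos=90, false_neg=10)   # 10 infections missed
spec = specificity(true_neg=980, false_pos=20)  # 20 false alarms

print(f"sensitivity = {sens:.2f}")
print(f"specificity = {spec:.2f}")
```

In this made-up study, the 10 missed infections are the false negatives that pull sensitivity down, and the 20 false alarms are the false positives that pull specificity down.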

Our tests accomplish this in two broad ways. They search for specific snippets of the virus’s genetic material, putting them in the category of molecular tests, or find hunks of pathogen protein, which is the job of antigen tests. (Most of the rapid tests you can find in stores are antigen, while PCR tests are molecular.) Both types will make mistakes, but whereas molecular tests repeatedly copy viral genetic material until it’s detectable, making it easier to root out the pathogen when it’s quite scarce, antigen tests just survey samples for SARS-CoV-2 proteins that are already there. That means they’re likelier to miss infections, especially in people with no symptoms.

Even super-sensitive, super-specific tests can spit out more errors when they’re mishandled, or when people swab themselves sloppily. That can be pretty easy to do when instructions differ so much among brands, as they do for rapid antigen tests. (Wait 24 hours between tests! No, 36! Swivel it in your nose four times! No, five! Dip a strip in liquid! No, drop the liquid into a plastic strip! Wait 10 minutes for your result! No, 15!)

Random substances can also dupe certain tests: Soda, fruit juice, ketchup, and a bunch of other household liquids have produced rapid-antigen false positives, an oopsie that some kids in the United Kingdom have apparently been gleefully exploiting to recuse themselves from school. Manufacturing snafus can also trigger false positives, as recently happened with Ellume, a company that sells rapid antigen tests and had to recall some 2 million of them in the United States. (Sean Parsons, Ellume’s CEO, told me that the issue is now under control and that his company is “already producing and shipping new product to the U.S.”)

Even when they’re perfectly deployed, tests can detect bits of the virus only at the moment a sample is taken. Testing “negative” for the virus isn’t some sort of permanent identity; it doesn’t even guarantee that the pathogen isn’t there. Viruses are always multiplying, and a test that can’t find the virus in someone’s nose in the morning might pick it up come afternoon. People can also contract the virus between the tests they take, making a negative, then a positive, another totally plausible scenario. That means a test that’s taken two days before a Thanksgiving gathering won’t have any bearing on a person’s status during the event itself. “People want tests to be prospective,” Gigi Kwik Gronvall, a senior scholar at the Johns Hopkins Center for Health Security, told me. “None are.”

Tests can serve a ton of different purposes.

Recently, I asked more than two dozen people—co-workers, family members, experts, strangers on Twitter—what they envisioned the “perfect” coronavirus test to be. The answers I got were all over the place.

People wanted tests that were cheap and accessible (which they’re currently not), ideally something that could give them a lightning-fast answer at home. They also, unsurprisingly, wanted totally accurate results. But what they wanted those results to accomplish differed immensely. Some said they’d test only if they were feeling unwell, while others were way more interested in using the tests as routine checks in the absence of symptoms or exposures, a tactic called screening, to reassure them that they weren’t infectious to others.

At least for now, certain tests will be better suited to some situations than others. “The best test to use depends on the question you’re asking of it,” Coffey told me. When someone’s sick or getting admitted into a hospital, for instance, health-care workers will generally reach first for the most precise, sensitive test they can get their hands on. A missed infection here is high-stakes: Someone could be excluded from a sorely needed treatment, or put other people at risk. But lab tests are inconvenient for the people who take them, and very often slow. Samples have to be collected by a professional, then sent out for processing; people can be left waiting for several days, during which their infection status might have changed.

Using a rapid test can be much more convenient, especially if people feel unwell at home—and these tests do work great for that. But things get hairier when these products are used for screening purposes. Asymptomatic infections are a lot harder to detect in general, because there’s no obvious bodily signal to prompt a test. “You’re essentially randomly sampling,” which means more errors will inevitably crop up, Linoj Samuel, a clinical microbiologist at the Henry Ford Health System in Michigan, told me. To patch this problem, the FDA has green-lit several rapid tests that tell users to administer them serially—at least once every couple of days. A test that misses the virus one day will hopefully catch it the next, especially if levels are rising.
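
The arithmetic behind serial testing can be sketched roughly as follows. This is a simplified illustration, not how any regulator evaluates tests: it assumes each test is an independent try with the same chance of catching the infection, which real infections do not obey because virus levels rise and fall from day to day. The 60 percent figure is hypothetical.

```python
def chance_of_at_least_one_positive(per_test_sensitivity: float, n_tests: int) -> float:
    """Probability that at least one of n tests catches a real infection.

    Simplifying assumption: every test has the same, independent chance
    of detecting the virus.
    """
    chance_all_miss = (1 - per_test_sensitivity) ** n_tests
    return 1 - chance_all_miss


# Hypothetical per-test sensitivity of 60 percent in people without symptoms.
for n in (1, 2, 3):
    p = chance_of_at_least_one_positive(0.60, n)
    print(f"{n} test(s): {p:.1%} chance of catching the infection")
```

Under those assumptions, a second test lifts the chance of catching an infection from 60 percent to 84 percent, which is the basic intuition for repeating a test that might miss on any given day.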

But for those hoping to narrow in on the people who are carrying the most virus in their airway—and probably pose the biggest contagious risk to others—rapid antigen tests might be enough to do the trick precisely because they are less sensitive. They won’t catch all infections, but not all infections are infectious; a positive antigen result, at least, could be a decent indication that someone should stay home, even if they’re feeling perfectly fine. That logic isn’t airtight, though. Antigen-positive is not precisely synonymous with infectious; antigen negatives cannot guarantee that someone is not. “For SARS-CoV-2, we don’t know the threshold—how much virus you need to be carrying” to be contagious, Melissa B. Miller, a clinical microbiologist at the University of North Carolina at Chapel Hill, told me. People on the border of positivity, for instance, might still transmit.

Many tests weren’t designed for one of the most basic ways we are using them.

People are turning to testing for asymptomatic check-ins that can give them peace of mind before a big event, or even give them the go-ahead to travel overseas. But a lot of these screening tests were initially designed to diagnose people who were already sick—and the tests’ performance won’t necessarily hold when they’re being repeatedly used on symptom-free people at home.

Part of the problem can be traced back to how the United States’ thinking on testing has evolved. Early on in the pandemic, regulatory agencies like the FDA prioritized tests for symptomatic patients; the agency has since noticeably shifted its stance, authorizing dozens of tests that can now be taken at home. But there are still some relics that have influenced how the tests have, and have not, been evaluated for use.

The Abbott BinaxNOW, for example, was first studied as a rapid diagnostic that people could take shortly after their symptoms first appeared. It can now be used as a screener, when it is serially administered at home to asymptomatic people (which is why the tests are sold in packs of two). But to nab that expanded authorization from the FDA, the company didn’t have to submit any data on the test’s performance when it was serially administered at home, or how well it worked in asymptomatic people. Instead, the FDA has been green-lighting serial tests based on how well their results match up to PCR results in symptomatic people. They just have to detect 80 percent of the infections that the super-sensitive molecular tests do, in a clinical setting.

I asked the FDA why that was. “The FDA does not feel that requiring specific serial-testing data from each manufacturer is necessary due to the current state of knowledge on serial testing,” James McKinney, a spokesperson, told me. (Some companies that already have products for sale, including Abbott and Becton, Dickinson, are collecting additional data now under FDA advisement.)

The repurposing of tests feels a little weird, experts told me. “I don’t see how you can reuse the same data, for very different goals,” Jorge Caballero, a co-founder of Coders Against COVID, who’s been tracking coronavirus-test availability and performance, told me. That doesn’t mean these tests are useless if you don’t have symptoms. But without more evidence, we’re still determining exactly what they’re able to tell us when we self-administer them once, twice, or more, even as we’re feeling fine.

Test results can tell us only so much.

The results produced by a coronavirus test aren’t actually the end of the testing pipeline. Next comes interpretation, and that’s a nest of confusions in its own right. Sure, tests can be wrong, but the likelihood that they are wrong changes depending on who’s using them, how, and when. People don’t always talk about what to do when they’re shocked by a result—but that sense of surprise can sometimes be the first sign that the test’s intel is wrong. “People should have some confidence on how likely it is they have the disease when they test,” Coffey told me. “Ideally, the test should confirm what you already think.”

Consider, for example, an unvaccinated person who starts feeling sniffly and feverish five days after mingling unmasked with a bunch of people at a party, several of whom tested positive the next day. That person’s likelihood of having the virus is pretty high; if they test positive, they can be pretty sure that’s right. Random screenings of healthy, vaccinated people with no symptoms and no known exposures, meanwhile, are way more likely to be negative, and positives here should raise at least a few more eyebrows. Some will be correct, but truly weird results may warrant a re-check with a more sensitive test.
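
The reasoning in these two scenarios is an application of Bayes' rule, and a short sketch can show why the same positive result deserves different levels of trust. Every number here is an assumption made up for illustration: the sensitivity, the specificity, and the two "chance of infection before testing" figures are hypothetical, not measurements.

```python
def chance_positive_is_real(pretest_probability: float,
                            sensitivity: float,
                            specificity: float) -> float:
    """Bayes' rule: probability of infection given a positive result."""
    true_positives = sensitivity * pretest_probability
    false_positives = (1 - specificity) * (1 - pretest_probability)
    return true_positives / (true_positives + false_positives)


# Hypothetical test: catches 85% of infections, clears 98% of non-infections.
SENS, SPEC = 0.85, 0.98

# Sick after a known exposure: assume a 50% chance of infection before testing.
exposed = chance_positive_is_real(0.50, SENS, SPEC)

# Random screening, no symptoms or exposure: assume a 1% chance before testing.
screened = chance_positive_is_real(0.01, SENS, SPEC)

print(f"exposed and symptomatic: {exposed:.2f}")
print(f"random screening:        {screened:.2f}")
```

With these assumed inputs, a positive in the exposed, symptomatic person is almost certainly real, while a positive from random screening is more likely than not a false alarm, which is why a surprising result may be worth re-checking.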

Yet another wrinkle has become particularly relevant as more and more people get vaccinated. Tests, which look only for pieces of pathogens, can’t distinguish between actively replicating virus that poses an actual transmission threat, and harmless hunks of virus left behind by immune cells that have obliterated the threat. A positive test for a vaccinated person might not mean exactly the same thing it does in someone who hasn’t yet had a shot—maybe, positive for positive, they’re less contagious. That’s not to say that noninfectious infections aren’t still important to track. But positives and negatives always have to be framed in context: when and why they’re being taken, and also by whom.

Tests will have to be part of our future, for as long as this virus is with us. But understanding their drawbacks is just as essential as celebrating their perks. Unlike masks and vaccines, which can proactively stop sickness, tests are by default reactive, catching only infections that have already begun. In and of themselves, they “don’t stop transmission,” Coffey told me. “It’s about what you do with the test. If you don’t do anything with the result, the test did nothing.”

This article previously misstated the type of coronavirus test manufactured by the company Ellume.

Source: www.theatlantic.com