It’s an old-fashioned idea that drivers manage their cars, steering them straight and keeping them out of trouble. In the emerging era of smart vehicles, it’s the cars that will manage their drivers. We’re not talking about the now-familiar assistance technology that helps drivers stay in their lanes or parallel park. We’re talking about cars that, by recognizing the emotional and cognitive states of their drivers, can prevent them from doing anything dangerous.

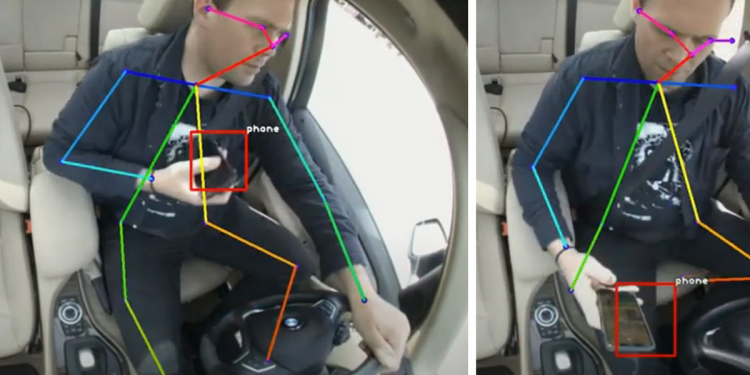

There are already some basic driver-monitoring tools on the market. Most of these systems use a camera mounted on the steering wheel, tracking the driver’s eye movements and blink rates to determine whether the person is impaired—perhaps distracted, drowsy, or drunk.

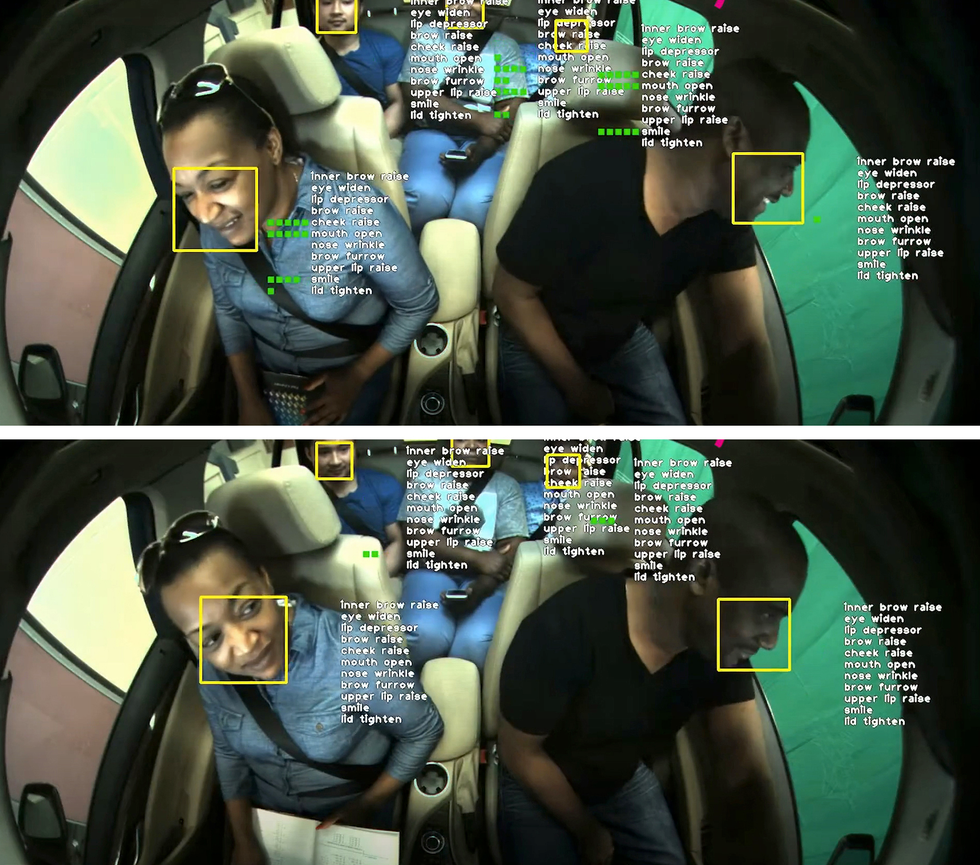

But the automotive industry has begun to realize that measuring impairment is more complicated than just making sure that the driver’s eyes are on the road, and it requires a view beyond just the driver. These monitoring systems need to have insight into the state of the entire vehicle—and everyone in it—to have a full understanding of what’s shaping the driver’s behavior and how that behavior affects safety.

If automakers can devise technology to understand all these things, they’ll likely come up with new features to offer—such as ways to improve safety or personalize the driving experience. That’s why our company, Affectiva, has led the charge toward interior sensing of the state of the cabin, the driver, and the other occupants. (In June 2021, Affectiva was acquired by Smart Eye, an AI eye-tracking firm based in Gothenburg, Sweden, for US $73.5 million.)

Automakers are getting a regulatory push in this direction. In Europe, a safety rating system known as the European New Car Assessment Program (Euro NCAP) updated its protocols in 2020 and began rating cars based on advanced occupant-status monitoring. To get a coveted five-star rating, carmakers will need to build in technologies that check for driver fatigue and distraction. And starting in 2022, Euro NCAP will award rating points for technologies that detect the presence of a child left alone in a car, potentially preventing tragic deaths by heat stroke by alerting the car owner or emergency services.

Some automakers are now moving the camera to the rearview mirror. With this new perspective, engineers can develop systems that detect not only people’s emotions and cognitive states, but also their behaviors, activities, and interactions with one another and with objects in the car. Such a vehicular Big Brother might sound creepy, but it could save countless lives.

Affectiva was cofounded in 2009 by Rana el Kaliouby and Rosalind Picard of the MIT Media Lab, who had specialized in “affective computing,” defined as computing systems that recognize and respond to human emotions. The three of us joined Affectiva at various points, aiming to humanize this technology: We worry that the boom in artificial intelligence (AI) is creating systems that have lots of IQ, but not much EQ, or emotional intelligence.

Over the past decade, we’ve created software that uses deep learning, computer vision, voice analytics, and massive amounts of real-world data to detect nuanced human emotions, complex cognitive states, activities, interactions, and objects people use. We’ve collected data on more than 10 million faces from 90 countries, using all that data to train our neural-network-based emotion classifiers. We labeled much of this data in accordance with the “facial action coding system,” developed by clinical psychologist Paul Ekman and Wallace Friesen in the late 1970s. We always pay attention to diversity in our data collection, making sure that our classifiers work well on all people regardless of age, gender, or ethnicity.

The first adopters of our technology were marketing and advertising agencies, whose researchers had subjects watch an ad while our technology watched them with video cameras, measuring their responses frame by frame. To date, we’ve tested 58,000 ads. For our advertising clients, we focused on the emotions of interest to them, such as happiness, curiosity, annoyance, and boredom.

But in recent years, the automotive applications of our technology have come to the forefront. This has required us to retrain our classifiers, which previously were not able to detect drowsiness or objects in a vehicle, for example. For that, we’ve had to collect more data, including one study with factory shift workers who were often tired when they drove back home. To date we have gathered tens of thousands of hours of in-vehicle data from thousands of participant studies. Gathering such data was essential—but it was just a first step.

We also needed to ensure that our deep-learning algorithms could run efficiently on vehicles’ embedded computers, which are based on what is called a system on a chip (SoC). Deep-learning algorithms are typically quite large, and these automotive SoCs often run a lot of other code that competes for the same computing resources. What’s more, there are many different automotive SoCs, and they vary in how many operations per second they can execute. Affectiva had to design its neural-network software in a way that takes into account the limited computational capacity of these chips.

Our first step in developing this software was to conduct an analysis of the use-case requirements; for example, how often does the system need to check whether the driver is drowsy? Understanding the answers to such questions helps put limits on the complexity of the software we create. And rather than deploying one large all-encompassing deep neural-network system that detects many different behaviors, Affectiva deploys multiple small networks that work in tandem when needed.
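As a rough illustration of how use-case requirements can bound the workload, the sketch below runs several small detectors at different rates instead of one large network on every frame. It is not Affectiva's code: the `Detector` class, the detector names, the frame fields, and the intervals are all hypothetical, and each "network" is replaced by a stand-in function.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Frame = dict  # stand-in for a camera frame (hypothetical)


@dataclass
class Detector:
    name: str
    every_n_frames: int            # assumed requirement: how often this check must run
    run: Callable[[Frame], float]  # stand-in for a small network; returns a score


def due_detectors(detectors: List[Detector], frame_index: int) -> List[Detector]:
    """Return only the detectors scheduled to run on this frame."""
    return [d for d in detectors if frame_index % d.every_n_frames == 0]


def process(detectors: List[Detector], frames: List[Frame]) -> Dict[str, List[float]]:
    """Run each detector only when it is due and collect its scores."""
    results: Dict[str, List[float]] = {d.name: [] for d in detectors}
    for i, frame in enumerate(frames):
        for d in due_detectors(detectors, i):
            results[d.name].append(d.run(frame))
    return results


# Hypothetical rates: gaze every frame, drowsiness every 30th, child seat every 300th.
detectors = [
    Detector("gaze", 1, lambda f: f["gaze_off_road"]),
    Detector("drowsiness", 30, lambda f: f["eye_closure"]),
    Detector("child_seat", 300, lambda f: f["seat_score"]),
]
frames = [{"gaze_off_road": 0.1, "eye_closure": 0.2, "seat_score": 0.0}] * 600
counts = {name: len(scores) for name, scores in process(detectors, frames).items()}
```

Over 600 frames, the slower checks run only a handful of times, which is the kind of saving that knowing the required check frequency makes possible.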

We use two other tricks of the trade. First, we use a technique called quantization-aware training, which allows the necessary computations to be carried out with somewhat lower numeric precision. This critical step reduces the complexity of our neural networks and allows them to compute their answers faster, enabling these systems to run efficiently on automotive SoCs.
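To make the idea of lower numeric precision concrete, here is a minimal sketch of the simulated ("fake") quantization step that quantization-aware training commonly applies in the forward pass, so that a network learns weights that survive rounding. The symmetric 8-bit scheme, the function name, and the sample weights are assumptions chosen for illustration; the article does not say which scheme Affectiva uses.

```python
def fake_quantize(values, num_bits=8):
    """Round floats to a signed-integer grid and map them back to floats.

    Symmetric, per-tensor quantization (an assumed scheme for this sketch).
    Returns the dequantized values and the scale that was used.
    """
    qmax = 2 ** (num_bits - 1) - 1            # 127 for 8 bits
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Integer codes the hardware would store, clipped to the valid range.
    codes = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    # Dequantize so the rest of the forward pass sees the rounding error.
    return [c * scale for c in codes], scale


weights = [0.5, -1.27, 0.003, 1.0]
dequantized, scale = fake_quantize(weights)
max_error = max(abs(w - d) for w, d in zip(weights, dequantized))
```

Each weight moves by at most half a quantization step. During training, frameworks typically treat the rounding as an identity function when computing gradients, so the network can adapt to this error before it is deployed with integer arithmetic.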

The second trick has to do with hardware. These days, automotive SoCs contain specialized hardware accelerators, such as graphics processing units (GPUs) and digital signal processors (DSPs), which can execute deep-learning operations very efficiently. We design our algorithms to take advantage of these specialized units.

To truly tell whether a driver is impaired is a tricky task. You can’t do that simply by tracking the driver’s head position and eye-closure rate; you need to understand the larger context. This is where the need for interior sensing, and not only driver monitoring, comes into play.

Drivers could be diverting their eyes from the road for many reasons. They could be looking away to check the speedometer, to answer a text message, or to check on a crying baby in the backseat. Each of these situations represents a different level of impairment.

The AI focuses on the face of the person behind the wheel and informs the algorithm that estimates driver distraction. Credit: Affectiva

Our interior sensing systems will be able to distinguish among these scenarios and recognize when the impairment lasts long enough to become dangerous, using computer-vision technology that not only tracks the driver’s face, but also recognizes objects and other people in the car. With that information, each situation can be handled appropriately.

If the driver is glancing at the speedometer too often, the vehicle’s display screen could send a gentle reminder to the driver to keep his or her eyes on the road. Meanwhile, if a driver is texting or turning around to check on a baby, the vehicle could send a more urgent alert to the driver or even suggest a safe place to pull over.
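The escalation described above can be sketched as a small policy table that maps what the driver is looking at, and for how long, to an alert level. This is a simplified illustration under stated assumptions: the target labels, the time limits, and the use of glance duration alone (rather than glance frequency) are all hypothetical.

```python
from enum import Enum


class Alert(Enum):
    NONE = "none"
    GENTLE = "gentle reminder"
    URGENT = "urgent alert"


# Hypothetical policy: (seconds off road before reacting, alert level).
POLICY = {
    "speedometer": (2.0, Alert.GENTLE),
    "phone": (1.0, Alert.URGENT),
    "rear_seat": (1.0, Alert.URGENT),
}
DEFAULT_RULE = (1.5, Alert.GENTLE)  # assumed fallback for unrecognized targets


def alert_for(target: str, seconds_off_road: float) -> Alert:
    """Pick an alert level from the glance target and its duration."""
    if target == "road":
        return Alert.NONE
    limit, level = POLICY.get(target, DEFAULT_RULE)
    return level if seconds_off_road >= limit else Alert.NONE
```

For example, `alert_for("speedometer", 0.5)` yields no alert, while `alert_for("phone", 1.2)` yields the urgent one. A production system would presumably also weigh speed, traffic, and how often the glances recur.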

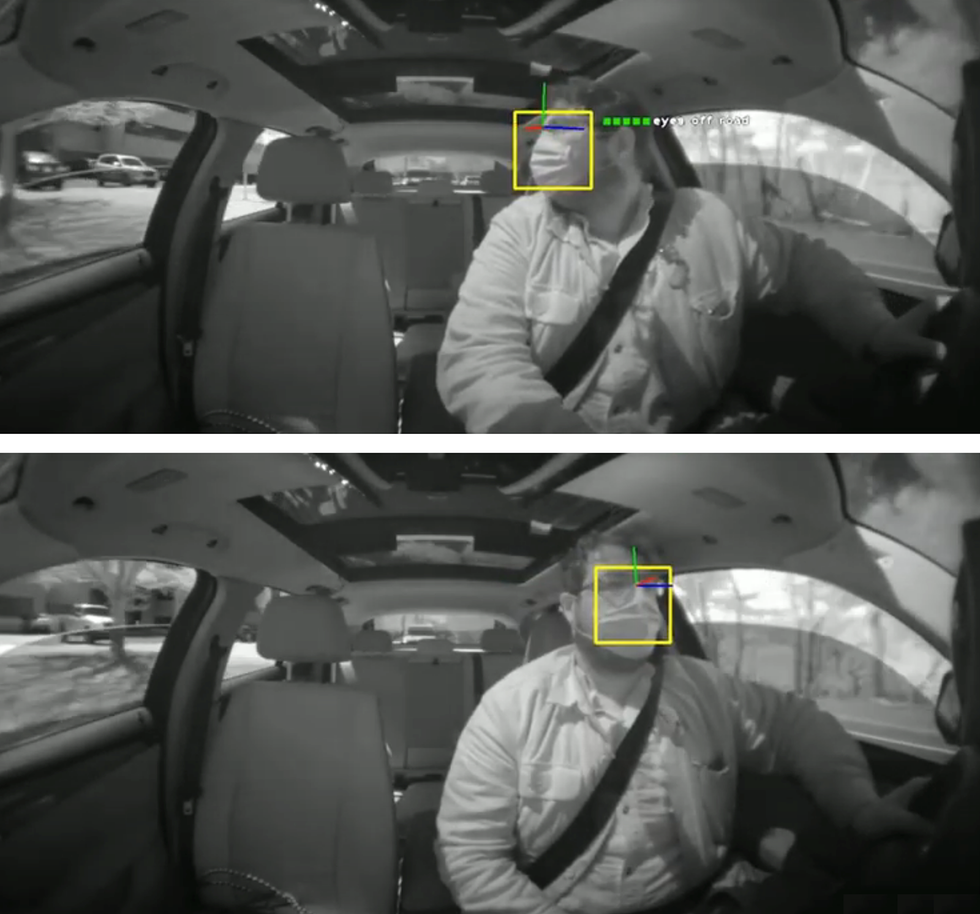

Drowsiness, however, is often a matter of life or death. Some existing systems use cameras pointed at the driver to detect episodes of microsleep, when eyes droop and the head nods. Other systems simply measure lane position, which tends to become erratic when the driver is drowsy. The latter method is, of course, ineffective if a vehicle is equipped with automated lane-centering technology.

We’ve studied the issue of driver fatigue and discovered that systems that wait until the driver’s head is starting to droop often sound the alarm too late. What you really need is a way to determine when someone is first becoming too tired to drive safely.

That can be done by viewing subtle facial movement—people tend to be less expressive and less talkative as they become fatigued. Or the system can look for quite obvious signs, like a yawn. The system can then alert the driver that she is showing initial signs of fatigue—perhaps even suggesting a safe place to get some rest, or at least a strong cup of coffee.

Affectiva’s technology can also address the potentially dangerous situation of children left unattended in vehicles. In 2020, 24 children in the United States died of heat stroke under such circumstances. Our object-detection algorithm can identify the child seat; if a child is visible to the camera, we can detect that as well. If there are no other passengers in the car, the system could send an alert to the authorities. Additional algorithms are under development to note details such as whether the child seat is front- or rear-facing and whether it’s covered by something such as a blanket. We’re eager to get this technology into place so that it can immediately start saving lives.
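The decision logic in this scenario can be expressed as a few rules over the detections. The sketch below is illustrative only: the `CabinState` fields and the intermediate "check" outcome (a seat is detected but no child is visible, for instance because of a blanket) are assumptions, not a description of the deployed system.

```python
from dataclasses import dataclass


@dataclass
class CabinState:
    child_seat_detected: bool
    child_visible: bool
    other_occupants: int  # people in the cabin besides the child


def unattended_child_action(state: CabinState) -> str:
    """Return "alert", "check", or "none" for the current cabin state."""
    if state.other_occupants > 0:
        return "none"    # someone else is present
    if state.child_visible:
        return "alert"   # child alone in the cabin
    if state.child_seat_detected:
        return "check"   # assumed: seat may be occupied but hidden from view
    return "none"
```

Keeping "check" separate from "alert" is a design choice for this sketch: it lets the vehicle notify the owner first when the evidence is ambiguous, and reserve escalation for cases where a child is actually seen.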

The AI identifies objects throughout the cabin, including a possibly occupied child’s car seat. Credit: Affectiva

Building all this intelligence into a car means putting cameras inside the vehicle. This raises some obvious privacy and security concerns, and automakers need to address these directly. They can start by building systems that don’t require sending images or even data to the cloud. What’s more, these systems could process data in real time, removing the need even to store information locally.

But beyond the data itself, automakers and companies such as Uber and Lyft have a responsibility to be transparent with the public about in-cabin sensing technology. It’s important to answer the questions that will invariably arise: What exactly is the technology doing? What data is being collected and what is it being used for? Is this information being stored or transmitted? And most important, what benefit does this technology bring to those in the vehicle? Automakers will no doubt need to provide clear opt-in and consent mechanisms to build consumer confidence and trust.

Privacy is also a paramount concern at our company as we contemplate two future directions for Affectiva’s technology. One idea is to go beyond the visual monitoring that our systems currently provide, potentially adding voice analysis and even biometric cues. This multimodal approach could help with tricky problems, such as detecting a driver’s level of frustration or even rage.

Drivers often get irritated with the “intelligent assistants” that turn out to be not so intelligent. Studies have shown that their frustration can manifest as a smile—not one of happiness but of exasperation. A monitoring system that uses facial analysis only would misinterpret this cue. If voice analysis were added, the system would know right away that the person is not expressing joy. And it could potentially provide this feedback to the manufacturer. But consumers are rightly concerned about their speech being monitored and would want to know whether and how that data is being stored.
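A toy example shows why the second modality helps. Here a smile probability from facial analysis is combined with a voice-valence score; both inputs, their ranges, and the thresholds are hypothetical, and a real multimodal system would learn this fusion rather than hard-code it.

```python
from typing import Optional


def interpret_smile(smile_prob: float, voice_valence: Optional[float]) -> str:
    """Label a moment using the face alone or the face plus the voice.

    smile_prob: assumed output of a face model, in [0, 1].
    voice_valence: assumed output of a voice model, in [-1, 1], or None if
    no speech is available.
    """
    if smile_prob < 0.5:
        return "no smile"
    if voice_valence is None:
        return "joy (face only)"  # the misreading described above
    return "frustration" if voice_valence < 0 else "joy"
```

With the face alone, an exasperated smile is labeled as joy; once a negative voice score is available, the same smile is labeled as frustration.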

We’re also interested in giving our monitoring systems the ability to learn continuously. Today, we build AI systems that have been trained on vast amounts of data about human emotions and behaviors, but that stop learning once they’re installed in cars. We think these AI systems would be more valuable if they could gather data over months or years to learn about a vehicle’s regular drivers and what makes them tick.

We’ve done research with the MIT AgeLab’s Advanced Vehicle Technology Consortium, gathering data about drivers over the period of a month. We found clear patterns: For example, one person we studied drove to work every morning in a half-asleep fog but drove home every evening in a peppy mood, often chatting with friends on a hands-free phone. A monitoring system that learned about its driver could create a baseline of behavior for the person; then if the driver deviates from that personal norm, it becomes noteworthy.
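One simple way to express "deviation from a personal norm" is a z-score against that driver's own history for the same context, such as the morning commute. The sketch below assumes a hypothetical alertness score between 0 and 1 and made-up sample values; it is not the method used in the research described here.

```python
from statistics import mean, stdev
from typing import List, Optional


def deviation_from_baseline(history: List[float], today: float,
                            min_samples: int = 5) -> Optional[float]:
    """Return how many standard deviations today is from this driver's norm.

    Returns None until enough history exists to form a baseline.
    """
    if len(history) < min_samples:
        return None
    mu = mean(history)
    sigma = max(stdev(history), 1e-6)  # guard against a perfectly flat history
    return (today - mu) / sigma


# Hypothetical per-context histories of an alertness score in [0, 1].
baselines = {
    "morning": [0.30, 0.35, 0.28, 0.32, 0.31, 0.33],  # habitually groggy
    "evening": [0.80, 0.78, 0.85, 0.82, 0.79, 0.81],  # habitually peppy
}
typical_morning = deviation_from_baseline(baselines["morning"], 0.31)
unusual_evening = deviation_from_baseline(baselines["evening"], 0.31)
```

The same score of 0.31 is unremarkable in the morning for this driver but far outside the evening norm, which is exactly the kind of contrast a fixed, population-wide threshold would miss.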

A system that learns continuously offers strong advantages, but it also brings new challenges. Unlike our current systems, which work on embedded chips and don’t send data to the cloud, a system capable of this kind of personalization would have to collect and store data over time, which some might view as too intrusive.

As automakers continue to add high-tech features, some of the most attractive ones for car buyers will simply modify the in-cabin experience, say, by regulating temperature or providing entertainment. We anticipate that the next generation of vehicles will also promote wellness.

Think about drivers who have daily commutes: In the mornings they may feel groggy and worried about their to-do lists, and in the evenings they may get frustrated by being stuck in rush-hour traffic. But what if they could step out of their vehicles feeling better than when they entered?

Using insight gathered via interior sensing, vehicles could provide a customized atmosphere based on occupants’ emotional states. In the morning, they may prefer a ride that promotes alertness and productivity, whereas in the evening, they may want to relax. In-cabin monitoring systems could learn drivers’ preferences and cause the vehicle to adapt accordingly.

The information gathered could also be beneficial to the occupants themselves. Drivers could learn the conditions under which they’re happiest, most alert, and most capable of driving safely, enabling them to improve their daily commutes. The car itself might consider which routes and vehicle settings get the driver to work in the best emotional state, helping enhance overall wellness and comfort.

Detailed analysis of faces enables the AI to measure complex cognitive and emotional states, such as distractedness, drowsiness, or affect. Credit: Affectiva

There will, of course, also be an opportunity to tailor in-cabin entertainment. In both owned and ride-sharing vehicles, automakers could leverage our AI to deliver content based on riders’ engagement, emotional reactions, and personal preferences. This level of personalization could also vary depending on the situation and the reason for the trip.

Imagine, for example, that a family is en route to a sporting event. The system could serve up ads that are relevant to that activity. And if it determined that the passengers were responding well to the ad, it might even offer a coupon for a snack at the game. This process could result in happy consumers and happy advertisers.

The vehicle itself can even become a mobile media lab. By observing reactions to content, the system could offer recommendations, pause the audio if the user becomes inattentive, and customize ads in accordance with the user’s preferences. Content providers could also determine which channels deliver the most engaging content and could use this knowledge to set ad premiums.

As the automotive industry continues to evolve, with ride sharing and autonomous cars changing the relationship between people and cars, the in-car experience will become the most important thing to consumers. Interior sensing AI will no doubt be part of that evolution because it can effortlessly give both drivers and occupants a safer, more personalized, and more enjoyable ride.
